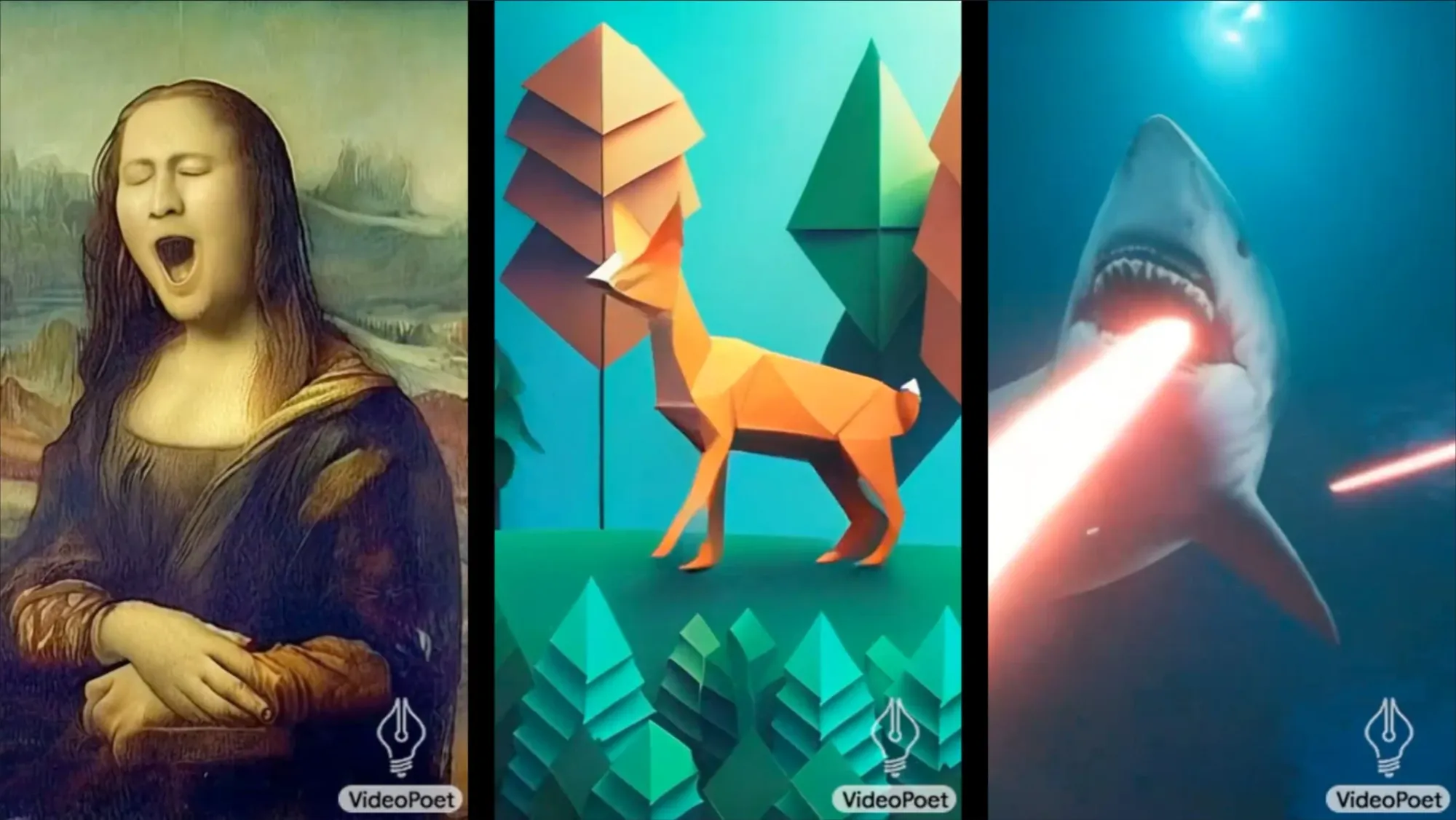

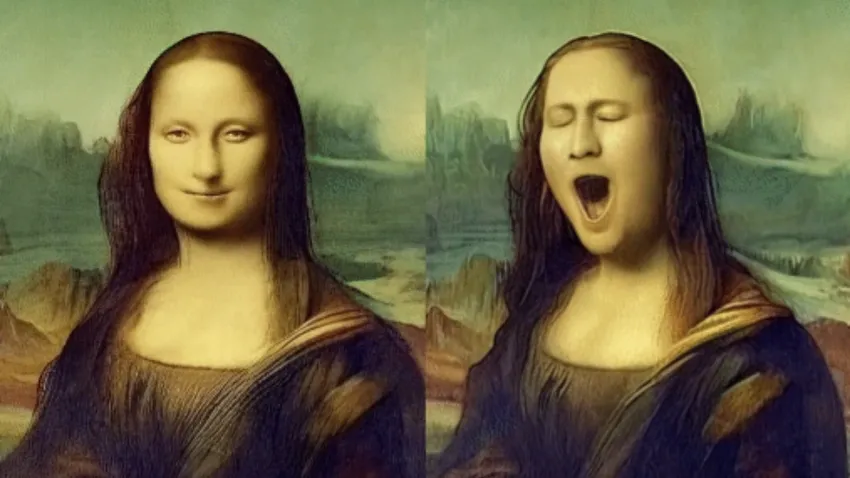

Google Research has unveiled VideoPoet, a video generation model that can produce high-motion, variable-length videos from a simple text prompt. Built as a large language model (LLM), it is a significant departure from prevailing video generation models, which rely heavily on diffusion-based methods and pre-existing image models such as Stable Diffusion.

Google presents VideoPoet as a significant improvement over traditional video generation models, with a broad set of features and capabilities. It relies on the MAGVIT-v2 tokenizer to turn video and images into discrete tokens that the language model can process, and it marks another bid by Google for the top spot in artificial intelligence tools. As a video generation tool, VideoPoet has the potential to empower digital content creators and influencers.

With VideoPoet, digital influencers could produce engaging content that captivates their audience. Content creators can also elevate their storytelling through its interactive editing capabilities: users can extend input videos and select from a list of examples to finely control the desired motion.

Users can also compose styles and effects in text-to-video generation by simply appending a style to a base prompt. Because the same base prompt can be paired with any number of styles, this feature opens up a wide range of creative variations.
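To make the idea of appending a style to a base prompt concrete, here is a minimal Python sketch. It only builds the prompt strings; VideoPoet has no public API, so the `compose_prompt` helper, the comma separator, and the example prompts are all illustrative assumptions rather than anything Google has documented.

```python
def compose_prompt(base_prompt: str, style: str = "") -> str:
    """Append a style description to a base prompt (hypothetical helper).

    The comma separator is an assumption for illustration; the source only
    says a style is appended to a base prompt.
    """
    base = base_prompt.strip().rstrip(".,")
    style = style.strip()
    return f"{base}, {style}" if style else base


# Hypothetical prompts: one base scene rendered in several styles.
base = "A dog running through a field"
styles = ["watercolor painting", "black and white film", ""]

for style in styles:
    print(compose_prompt(base, style))
```

Keeping the base prompt and the style separate makes it easy to reuse one scene description across many visual treatments.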

Ultimately, VideoPoet could become a valuable ally, offering many creative video editing and production possibilities. Unfortunately, Google VideoPoet is not publicly available yet. Although Google announced it and showcased some impressive capabilities in December 2023, it is still under development and not accessible for general use.